Preamble

This article is the third in a series of four dedicated to serverless and containerization concepts and how they can be applied in the context of a typical web application involving:

- A Content Management System (CMS) using Strapi.

- A public-facing front-end application using Gatsby.

- A web analytics tool to measure the behavior of users on our website using Umami.

The serverless/containerization concept implemented throughout these articles uses the above frameworks/tools, though the same principles can most likely be extended to others, apart from the time it takes to adapt them.

Introduction

Let us now continue our journey towards a web application completely free of provisioned servers. The purpose of this post is to set up a web analytics tool (Umami) in a serverless fashion, with a pay-per-use subscription in Azure. After all, if my website has no traffic 50% of the time, there is no reason to keep it running on a provisioned server 100% of the time. Avoiding that reduces both cost and energy consumption.

The concept we will adopt here is the following:

Instead of sending web analytics to an actual instance of Umami, they are first sent to an Azure Service Bus queue. On a daily basis (or at any other regular interval), an Azure Function is triggered. It retrieves all web analytics stored in the queue and forwards them to an instance of Umami that has been spun up specifically to collect the requests. Once all the messages have been processed, the instance of Umami is spun down.

To achieve this, we'll need to set up a few Azure resources and add a bit of code to do the work.

In order to get consistent data, we will also have to use a custom version of Umami that allows us to set the timestamp on events.

NB: Functionally speaking, one downside of this solution is that current visitors cannot be seen in real time, as the data only becomes available after the periodic processing of queued messages.
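The flow described above can be sketched with in-memory stand-ins. This is only an illustration of the idea, not the code from the repository: `createPipeline`, `enqueueEvent` and `runExport` are hypothetical names, the array stands in for the Service Bus queue, and the `umami` object stands in for the container and database that are started and stopped.

```javascript
// In-memory sketch of the queue-then-export flow. All names are hypothetical.
function createPipeline() {
  const queue = []; // stands in for the Azure Service Bus queue
  const umami = { running: false, received: [] }; // stands in for the Umami instance

  return {
    queue,
    umami,

    // Called for every tracking request: store it instead of sending it to Umami.
    enqueueEvent(event) {
      queue.push(event);
    },

    // Called on a schedule: start Umami, forward everything, stop Umami.
    runExport() {
      umami.running = true; // stands in for starting the ACI and the database
      let forwarded = 0;
      try {
        while (queue.length > 0) {
          umami.received.push(queue.shift());
          forwarded += 1;
        }
      } finally {
        umami.running = false; // spin down even if forwarding fails
      }
      return forwarded;
    },
  };
}

const demo = createPipeline();
demo.enqueueEvent({ url: "/", timestamp: "2022-01-01T10:00:00.000Z" });
demo.enqueueEvent({ url: "/blog", timestamp: "2022-01-01T10:05:00.000Z" });
console.log(demo.runExport()); // number of events forwarded to the stand-in
```

Because events wait in the queue for hours, each one has to carry its own timestamp, which is why a modified Umami is needed.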

Overview

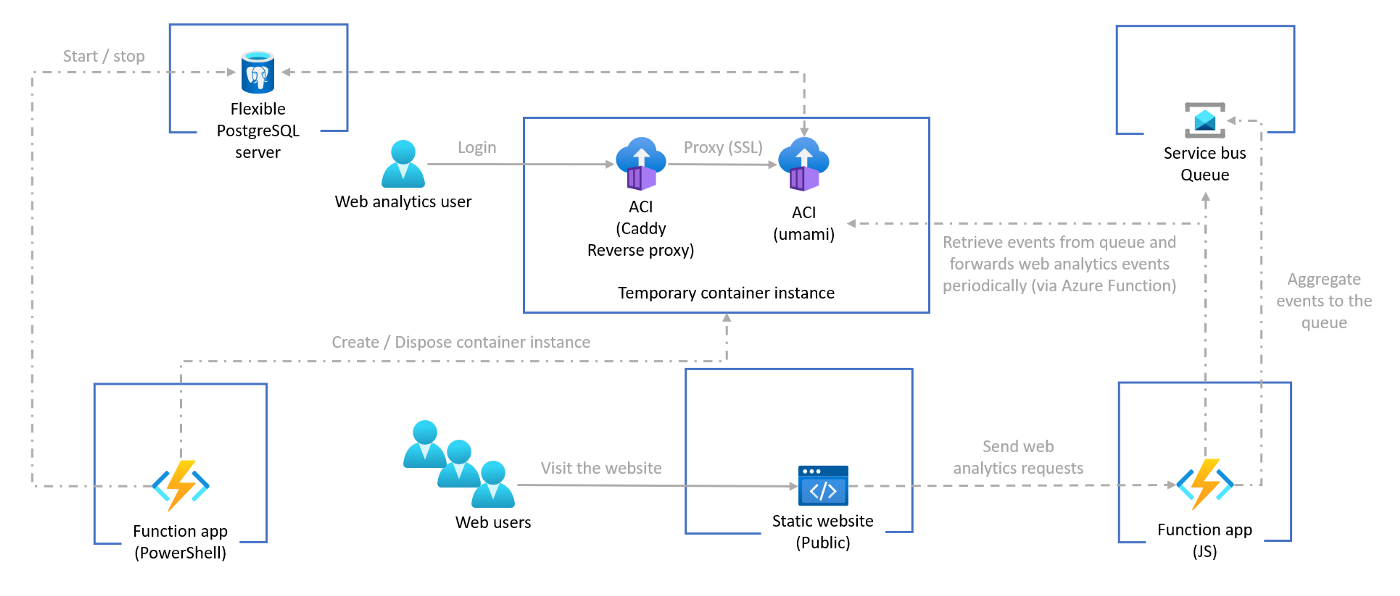

Below is an overview of the architecture of the system that we will partly deploy via Terraform:

The different entities:

- A static web app for our public-facing web application.

- A JavaScript Function App acting as our static web app API, mainly used to queue up messages to the Service Bus queue and forward them to Umami.

- A JavaScript Function App with time triggers, which ensures that the messages in the queue are exported periodically. These functions call the functions of the Function App above.

- An Azure Container Instance (ACI) containing our running instance of Umami, served by a reverse proxy (Caddy).

- A flexible PostgreSQL server, whose advantage is that it can be started and stopped on demand to lower the total cost of ownership (TCO).

- A Service Bus queue that collects page views/events from users visiting our website and stores them until further processing is done.

- A PowerShell Azure Function that allows us to start/stop our ACI and flexible server.

Umami

Umami is a great JavaScript web analytics tool, which I have chosen for this use case because it is open source, simple, and easy to work with.

However, there was one challenge in having no provisioned server running Umami: the timestamps for sessions, page views and events are set internally by the web analytics tool when it receives the requests. For that reason, I had to change a few parts of the code so that the requests sent to Umami can set the timestamp instead. That way, the data stored in Umami is equivalent to what would have been recorded had the sessions, page views and events been sent in real time.

NB: The timestamps are not set by the users’ requests directly but by the Azure function that forwards them to the queue, which prevents users from tampering with them.
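A minimal sketch of that server-side stamping, under stated assumptions: the function name `stampTrackingRequest` and the `timestamp` field name are hypothetical, and the real payload shape depends on the customized Umami build.

```javascript
// Build the message that goes on the queue.
// Any timestamp supplied by the client is overwritten with the server's time,
// so a visitor cannot backdate or postdate an event.
// The `timestamp` field name is an assumption made for this sketch.
function stampTrackingRequest(clientBody, now = new Date()) {
  return { ...clientBody, timestamp: now.toISOString() };
}

const stamped = stampTrackingRequest(
  { url: "/pricing", timestamp: "1999-01-01T00:00:00.000Z" }, // forged by the client
  new Date("2022-03-01T12:00:00.000Z")
);
console.log(stamped.timestamp); // 2022-03-01T12:00:00.000Z
```

Passing `now` as a parameter keeps the function easy to test; in a deployed function the default value would be used.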

Once this was done, all that was left was to create a Docker image containing the changes so that it can be used in the ACI.

NB2: As explained in previous posts, there is also a way for a user to spin up an instance of Umami by visiting a URL and to be notified when the instance is ready.

Static web app

Another important point concerns the tracker script used to send data to the Umami instance.

Instead of fetching this script from the Umami server, we include it directly in the files of our static app and change the root URL of its calls so that it calls our Azure function API instead.

Azure functions

There are three Azure Function apps in our solution.

One of them uses PowerShell, which makes it easy to run automation tasks in Azure thanks to the Az PowerShell module. We will basically use it to start/stop our ACI and flexible PostgreSQL server.

The second one backs our static web app. It receives the tracking requests, sets the timestamps and sends them to the queue.

The third one contains two time-triggered functions and one HTTP-triggered function. They are in charge of making sure that the daily export happens and that the messages are forwarded from the queue to Umami.

NB: For the export, keep in mind that there might be a lot of requests to forward from the queue to Umami. This means that the Azure function might time out before all messages are processed (the maximum timeout is 10 minutes on the consumption plan).

To work around that, the Azure function processes at most 100 requests per invocation and calls itself again in a loop until all messages are processed.
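The batching workaround can be sketched as follows. This is an illustration under assumptions rather than the repository's code: `receiveBatch` and `forward` are hypothetical injected callbacks standing in for the queue client and the HTTP call to Umami, and the loop in `exportAll` stands in for the function re-invoking itself over HTTP, where each iteration would be a new invocation with a fresh timeout.

```javascript
const BATCH_SIZE = 100; // at most 100 requests per invocation, as described above

// One invocation: forward at most BATCH_SIZE messages, one after the other,
// then report whether another invocation is needed.
async function exportBatch(receiveBatch, forward) {
  const messages = await receiveBatch(BATCH_SIZE);
  for (const message of messages) {
    await forward(message);
  }
  // A full batch suggests the queue may still hold messages.
  return { processed: messages.length, more: messages.length === BATCH_SIZE };
}

// Stand-in for "the function calls itself until the queue is empty".
async function exportAll(receiveBatch, forward) {
  let total = 0;
  let result;
  do {
    result = await exportBatch(receiveBatch, forward);
    total += result.processed;
  } while (result.more);
  return total;
}

// Usage with an in-memory queue of 250 fake messages (batches of 100, 100, 50).
const fakeQueue = Array.from({ length: 250 }, (_, i) => ({ id: i }));
const forwarded = [];
exportAll(
  async (max) => fakeQueue.splice(0, max),
  async (message) => { forwarded.push(message); }
).then((total) => console.log(total)); // 250
```

Keeping each invocation small bounds its running time regardless of how many messages have accumulated in the queue.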

Trying it out

If you want to try it out, the code is available here and the README contains the necessary steps and instructions in order to deploy the solution.

Most of the work is done by the Terraform scripts, including the setup of most of the environment variables for the different resources. However, a few manual steps are still needed, which involve a few edits or commands:

- Create a website on Umami, retrieve the website id and add it to the script of the static web app where the tracker is included.

- Initialize the database and its schema so it is ready to be used by Umami.

- Deploy the code to the static web app and its API Function App.

- Deploy the PowerShell HTTP trigger Azure Function code to the Function App.

- Deploy the JavaScript time trigger Azure Functions code to the Function App.

Results

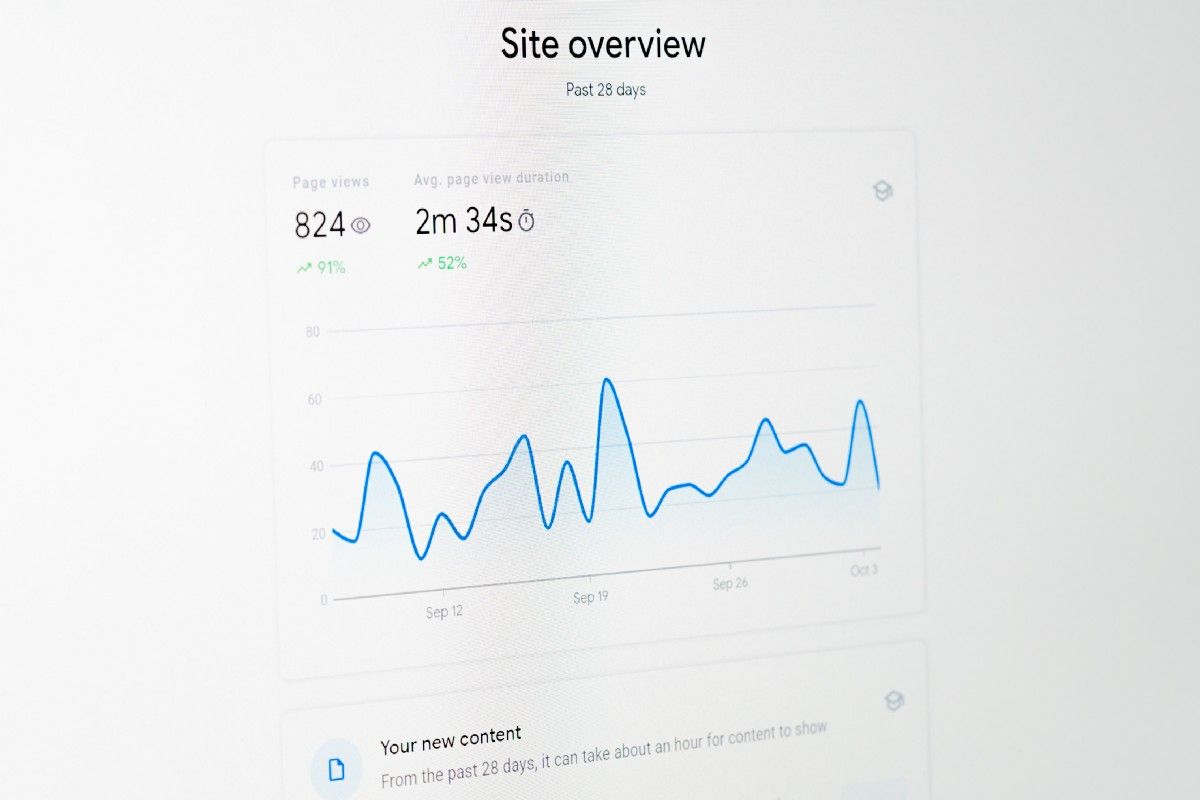

I have only tested this solution in the context of a Gatsby static web app, for demonstration purposes. With this in mind, I asked a few people to visit the website and click around in order to gather some data, and I ran a few exports of batched tracking requests to see how the Azure functions and the infrastructure behaved.

As far as I could see, it behaves as expected and handles the load without any apparent issues. Messages are forwarded from the queue to Umami at a rate of ~350 requests/min, which means that 10,000 requests would be exported in ~30 min. All requests are sent synchronously, one after the other, so it might be possible to improve performance by sending them asynchronously, but I haven’t tried it myself. A more powerful ACI or PostgreSQL database might help as well.
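As a quick back-of-the-envelope check of these figures, here is a small sketch. The function names are hypothetical, the rate is the ~350 requests/min measured above, and the batch size of 100 is the one used by the export function.

```javascript
// Rough export-time estimates from a measured forwarding rate.
function estimateExportMinutes(messageCount, requestsPerMinute) {
  return messageCount / requestsPerMinute;
}

function estimateInvocations(messageCount, batchSize) {
  return Math.ceil(messageCount / batchSize);
}

console.log(estimateExportMinutes(10000, 350).toFixed(1)); // 28.6 (minutes in total)
console.log(estimateInvocations(10000, 100)); // 100 (invocations of 100 messages)
console.log((estimateExportMinutes(100, 350) * 60).toFixed(1)); // 17.1 (seconds per invocation)
```

At this rate a single batch of 100 takes roughly 17 seconds, far below the 10-minute timeout of the consumption plan.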

In any case, it would be good to see how it behaves over the longer term as well.

I will update this post if I run this solution for a website in production.